If you oversee critical power for a data center or other mission-critical operation, you probably don’t have the time or staff to monitor your data as closely as you should. But what you’re doing seems to be working, so why worry?

Because in critical operations, even a temporary outage can be enormously expensive, and it can happen to some of the biggest and best-financed brands in the world. Apple’s App Store and iTunes outage in March 2015 lasted just 12 hours but cost the company an estimated $25 million. Facebook’s 14-hour outage in March 2019 cost an estimated $90 million. And in August 2016, a power outage took Delta Air Lines’ systems down for just five hours, a disruption that cost the company an estimated $150 million.
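A rough way to compare these incidents is to divide each estimated loss by the length of the outage. This is only a back-of-the-envelope sketch: it assumes the whole estimate accrued during the outage window, which overstates the hourly rate whenever losses continued after service was restored.

$$\frac{\$25\ \text{million}}{12\ \text{h}} \approx \$2.1\ \text{million/h}, \qquad \frac{\$90\ \text{million}}{14\ \text{h}} \approx \$6.4\ \text{million/h}, \qquad \frac{\$150\ \text{million}}{5\ \text{h}} = \$30\ \text{million/h}$$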

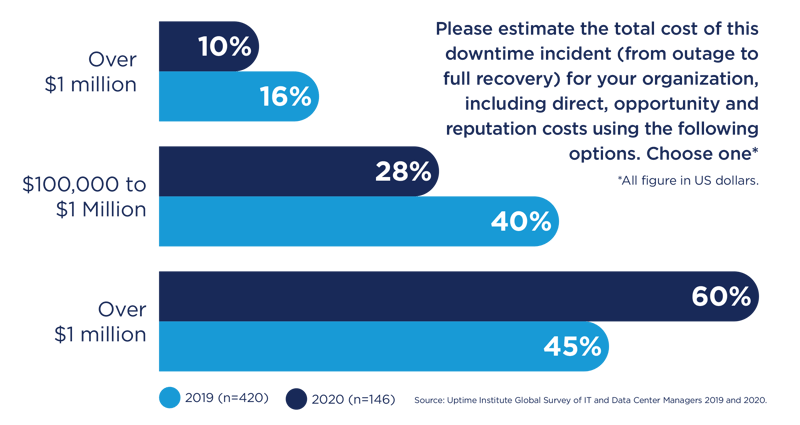

A recent study, the Uptime Institute Global Survey of IT and Data Center Managers 2020, found that more than half of outages cost over $100,000, and 16% cost more than $1 million.

Additionally, the Uptime Institute’s Abnormal Incident Reports (AIRs) database, compiled over the past 25 years, shows that electrical failures accounted for 80% of all IT load losses in data centers.

In other words, power is the leading cause of data center outages, and that is especially frustrating because so many of these outages are preventable.

Most power-related outages are caused by failures of a data center’s “last lines of defense”: uninterruptible power supplies (UPSs), transfer switches, and generators.

Better use of data can reduce those outages in several ways, starting with making sure your critical power works when you need it.

System Design

System design should be based on data. You need to know what loads your facility draws and which of them are essential if power goes down. Your critical power and backup systems should be designed and implemented with this data in mind, because your facility will very likely experience multiple shutdowns, both full and partial, every year.

A recent Ponemon Institute study found that downtime events can strike anywhere across a data center network. Core data centers experience an average of nearly 10 partial shutdowns (isolated to select racks or servers) and 2.4 total facility shutdowns a year, and each total shutdown lasts an average of 138 minutes (more than two hours).
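Multiplying the two Ponemon averages gives a rough sense of how much full-facility downtime a typical core data center faces each year. This simple product assumes the averages can be combined directly and ignores variation between facilities:

$$2.4\ \frac{\text{shutdowns}}{\text{year}} \times 138\ \frac{\text{min}}{\text{shutdown}} = 331.2\ \frac{\text{min}}{\text{year}} \approx 5.5\ \frac{\text{h}}{\text{year}}$$

That is roughly five and a half hours of total downtime a year, before counting any of the partial shutdowns.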

Yet even though companies know how costly unplanned downtime from full and partial shutdowns can be, many still do not follow best practices in data center design and redundancy to prevent it.

In fact, the same Ponemon Institute study found that only 46% of data center respondents and 54% of edge center respondents said they use all best practices in data center design and redundancy. Why are those numbers so low? Not because companies are unaware of best practices, but because they feel cost constraints limit their ability to apply them.

But skipping best practices in system design to save money in the short term can carry significant long-term costs: 69% of respondents in the Ponemon study said cost constraints had directly increased their risk of unplanned outages.

Maintenance and Reporting

Onsite power issues cause 37% of power outages. Some of those outages are likely attributable, at least in part, to inadequate system design, but faulty equipment is another common cause of downtime.

Sometimes the problem isn’t the equipment itself but how it is maintained. A 2017 study found that 70% of companies lack complete awareness of when their equipment is due for maintenance or upgrade.

With better monitoring of equipment maintenance schedules, and a skilled technician who can track and interpret that data, companies can prevent a significant share of these expensive and avoidable outages.
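As a minimal sketch of what tracking maintenance schedules can look like, the Python below flags equipment that is overdue or due soon for service. The `Asset` record, the per-asset service intervals, the 30-day warning window, and the sample equipment are all hypothetical assumptions for illustration, not real maintenance guidance or any particular monitoring product’s data model.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


# Hypothetical asset record: field names and intervals are illustrative only.
@dataclass
class Asset:
    name: str
    kind: str            # e.g. "UPS", "transfer switch", "generator"
    last_serviced: date
    interval_days: int   # assumed service interval for this asset

    def next_due(self) -> date:
        """Date the next service is due, based on the last service date."""
        return self.last_serviced + timedelta(days=self.interval_days)


def maintenance_status(
    assets: list[Asset], today: date, warn_days: int = 30
) -> tuple[list[Asset], list[Asset]]:
    """Split assets into (overdue, due_soon), each sorted by due date.

    warn_days is an assumed look-ahead window for "due soon".
    """
    overdue: list[Asset] = []
    due_soon: list[Asset] = []
    for asset in assets:
        due = asset.next_due()
        if due < today:
            overdue.append(asset)
        elif due <= today + timedelta(days=warn_days):
            due_soon.append(asset)
    return sorted(overdue, key=Asset.next_due), sorted(due_soon, key=Asset.next_due)


if __name__ == "__main__":
    # Hypothetical sample inventory.
    inventory = [
        Asset("UPS-A", "UPS", date(2024, 1, 10), 180),
        Asset("ATS-1", "transfer switch", date(2024, 3, 1), 365),
        Asset("GEN-1", "generator", date(2024, 5, 20), 90),
    ]
    late, soon = maintenance_status(inventory, today=date(2024, 8, 1))
    for a in late:
        print(f"OVERDUE  {a.name} ({a.kind}) was due {a.next_due()}")
    for a in soon:
        print(f"DUE SOON {a.name} ({a.kind}) due {a.next_due()}")
```

In practice, the intervals would come from manufacturer recommendations and your own maintenance records, and the results would feed an alerting or reporting system rather than `print`.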

Winning Over Management

The Ponemon study also highlights a key barrier to adopting best practices for preventing and managing unplanned outages: convincing upper management.

The study found that only 50% of data center respondents and 51% of edge center respondents believed that their upper management “fully supports our efforts to prevent and manage unplanned outages.”

That’s a big problem, and it suggests executives need more data before they are convinced. But gathering that data is one more task for staff who are already stretched thin.

Outsourcing data monitoring and reporting may be the key to earning upper management’s attention. Regular reports let executives focus on what they care about: running the business with confidence that critical power systems are reliable and in full working order, and managing critical power budgets without wild swings caused by unforeseen events.

Conclusion

When you’re running a mission-critical business, you know that you can’t afford to have your power go down.

Using data to design a critical power system that works when you need it can save you hundreds of thousands of dollars, and sometimes much more.

Concentric uses data to design and maintain critical power systems so that you can focus on running your business.